Data Security in the Age of AI: Protecting Your Business Without Stifling Innovation

Here’s a number that should make every business leader pause: 78% of organizations are now using AI, according to Stanford’s 2025 AI Index. But here’s the catch—nearly 40% of employees share confidential data with AI platforms without approval, based on research from CybSafe and the National Cybersecurity Alliance.

The AI revolution isn’t coming. It’s here. And it’s happening with or without your IT department’s blessing.

The Shadow AI Problem Nobody Wants to Talk About

Remember when your biggest security worry was someone leaving their laptop at a coffee shop? Those days feel quaint now.

Today’s challenge? Shadow AI: the unauthorized use of AI tools that bypass corporate governance entirely. IBM’s 2025 Cost of a Data Breach Report offers a sobering statistic: 20% of the organizations studied suffered a breach involving shadow AI, and heavy shadow AI use added an average of $670,000 to breach costs compared with organizations that had little or none.

“97% of organizations experiencing AI-related breaches lacked proper AI access controls,” notes IBM’s latest security research.

Why are employees going rogue? Simple. They’ve discovered that ChatGPT can draft that report in 10 minutes instead of two hours. Or that Claude can analyze customer feedback faster than any manual process. The productivity gains are too tempting to ignore—even if it means pasting sensitive company data into a public AI model.

The Real Cost of Unprotected AI Usage

Let’s get specific about what’s at stake:

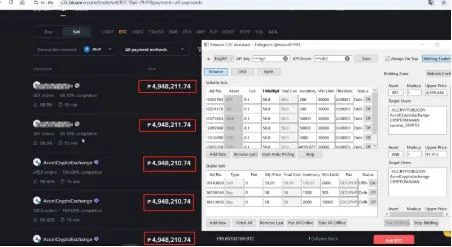

- Intellectual Property Exposure: Samsung learned this the hard way when employees inadvertently compromised proprietary source code by inputting it into ChatGPT

- Compliance Violations: With regulations like the EU AI Act mandating AI asset inventories, undocumented AI use becomes a legal liability

- Data Training Risks: Many consumer AI tools train on user inputs—your company’s strategic plans could become part of a public model

- Supply Chain Vulnerabilities: Most AI breaches originate through compromised APIs and third-party integrations

Building a Framework That Actually Works

Here’s what successful companies are doing differently. They’re not trying to stop the AI wave—they’re learning to surf it safely.

1. Embrace Strategic AI Adoption

Companies with formal AI strategies report 80% success rates in AI adoption, compared to just 37% for those without, according to Writer’s 2025 enterprise survey. The message? Don’t let AI adoption happen by accident.

Start by understanding what your teams actually need. Marketing might benefit from content generation tools. Engineering needs code assistants. Finance wants predictive analytics. Each department has different risk profiles and data sensitivity levels.

2. Deploy Enterprise-Grade Solutions

Consumer AI tools weren’t built for corporate data protection. That’s where specialized solutions come in. Advanced AI data security platforms can trace data lineage—understanding not just what data exists, but how it moves, transforms, and gets shared across AI systems.

Modern protection goes beyond simple blocking. It’s about intelligent controls that understand context. A marketing team member sharing campaign ideas with AI? Probably fine. The same person uploading customer financial records? That triggers an alert.
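To make "context" a little more concrete, here is a minimal Python sketch of a policy check that weighs who is sending data, which tool they are using, and how sensitive the data is. Everything in it is an assumption made up for illustration: the sensitivity tiers, the `enterprise-assistant` tool name, the per-department limits, and the `evaluate` function. It is not how any particular product works.

```python
from dataclasses import dataclass
from enum import Enum


class Sensitivity(Enum):
    # Hypothetical classification tiers, lowest to highest.
    PUBLIC = 1
    INTERNAL = 2
    CONFIDENTIAL = 3
    RESTRICTED = 4


class Decision(Enum):
    ALLOW = "allow"
    ALERT = "alert"
    BLOCK = "block"


@dataclass(frozen=True)
class AIRequest:
    department: str
    tool: str
    sensitivity: Sensitivity


# Assumed policy data: one approved tool, plus the highest tier each
# department may send to it without raising an alert.
APPROVED_TOOLS = {"enterprise-assistant"}
MAX_SENSITIVITY = {
    "marketing": Sensitivity.INTERNAL,
    "engineering": Sensitivity.CONFIDENTIAL,
    "finance": Sensitivity.CONFIDENTIAL,
}


def evaluate(request: AIRequest) -> Decision:
    """Decide what to do with a request based on its context."""
    # Restricted data never goes to an AI tool in this sketch.
    if request.sensitivity is Sensitivity.RESTRICTED:
        return Decision.BLOCK
    # Unapproved tools may only receive public data.
    if request.tool not in APPROVED_TOOLS:
        if request.sensitivity is Sensitivity.PUBLIC:
            return Decision.ALLOW
        return Decision.BLOCK
    # Approved tool: compare the data tier with the department's limit.
    # Unknown departments default to the most cautious limit.
    limit = MAX_SENSITIVITY.get(request.department, Sensitivity.PUBLIC)
    if request.sensitivity.value <= limit.value:
        return Decision.ALLOW
    return Decision.ALERT


# Campaign ideas from marketing: within the department's limit.
print(evaluate(AIRequest("marketing", "enterprise-assistant", Sensitivity.INTERNAL)).value)      # allow
# Customer financial records from the same person: over the limit.
print(evaluate(AIRequest("marketing", "enterprise-assistant", Sensitivity.CONFIDENTIAL)).value)  # alert
```

The point of the design is that the same person and the same tool can produce different outcomes depending on the data involved, which is something a blanket block list cannot express.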

3. Implement Intelligent Detection

Traditional security tools scan for patterns and keywords. But AI-generated content doesn’t follow predictable patterns. You need something smarter.
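For a sense of what pattern-and-keyword scanning looks like, and where it runs out of road, here is a small Python sketch. The two rules and the `scan` function are hypothetical and far simpler than a real data loss prevention product; they are only meant to show the general shape of the approach.

```python
import re

# Illustrative rules only: a US Social Security number shape and
# a couple of labels that often mark sensitive documents.
PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "keyword": re.compile(r"\b(?:confidential|internal only)\b", re.IGNORECASE),
}


def scan(text: str) -> list[str]:
    """Return the names of every rule that matches the text."""
    return sorted(name for name, pattern in PATTERNS.items() if pattern.search(text))


# A prompt with an obvious identifier and label trips both rules.
print(scan("Customer SSN 123-45-6789 (CONFIDENTIAL)"))  # ['keyword', 'ssn']

# A paraphrased strategic plan contains nothing the rules recognize.
print(scan("Summarize our plan to acquire a rival firm next quarter"))  # []
```

The second prompt may be every bit as sensitive as the first, but because it carries no recognizable pattern or label, a scanner of this kind lets it through.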

AI-powered detection systems like Linea use Large Lineage Models to understand risk based on data movement patterns, not just content. They can distinguish between an employee using AI for legitimate productivity gains and someone exfiltrating sensitive information.
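The idea of judging risk by where data came from, rather than by what it contains, can be illustrated with a toy provenance graph in Python. To be clear, this is not how Linea or any Large Lineage Model works internally. The `LineageGraph` class, its method names, and the file paths are all invented for this sketch.

```python
from collections import defaultdict


class LineageGraph:
    """Toy record of which artifacts were derived from which sources."""

    def __init__(self) -> None:
        self._parents: dict[str, set[str]] = defaultdict(set)
        self._sensitive: set[str] = set()

    def mark_sensitive(self, artifact: str) -> None:
        self._sensitive.add(artifact)

    def record_derivation(self, source: str, derived: str) -> None:
        """Note that `derived` was copied or transformed from `source`."""
        self._parents[derived].add(source)

    def derives_from_sensitive(self, artifact: str) -> bool:
        """Walk back through every ancestor looking for a sensitive source."""
        seen: set[str] = set()
        stack = [artifact]
        while stack:
            node = stack.pop()
            if node in self._sensitive:
                return True
            if node in seen:
                continue
            seen.add(node)
            stack.extend(self._parents.get(node, ()))
        return False


graph = LineageGraph()
graph.mark_sensitive("crm/customer_financials.csv")
# The records were summarized into notes, then pasted into a prompt.
graph.record_derivation("crm/customer_financials.csv", "notes/q3_summary.txt")
graph.record_derivation("notes/q3_summary.txt", "prompt/paste_001")

print(graph.derives_from_sensitive("prompt/paste_001"))             # True
print(graph.derives_from_sensitive("marketing/campaign_ideas.md"))  # False
```

Even if the summary has been reworded beyond the reach of any keyword rule, its history still leads back to the sensitive source, and that history is what this kind of check looks at.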

4. Create an AI-First Security Culture

Policies matter, but culture matters more. According to Zylo’s 2025 SaaS Management Index, 81.8% of IT leaders now have documented AI governance policies. But policies without buy-in are just documents.

| Instead of This | Try This |
| --- | --- |
| “AI tools are banned” | “Here are our approved AI tools with enterprise security” |
| “Don’t share company data” | “Use these guidelines to classify data before AI processing” |
| “Report violations to IT” | “Let’s discuss your AI needs and find secure solutions” |

The Path Forward

McKinsey’s research reveals that over 80% of respondents aren’t seeing tangible bottom-line impact from AI yet. Why? Because they’re stuck in the experimentation phase—often due to security concerns slowing adoption.

The winners in this AI race won’t be companies that block everything. Nor will they be those who leave their data exposed. Success belongs to organizations that build comprehensive data security frameworks enabling safe, productive AI use.

Think of it this way: You wouldn’t let employees drive company vehicles without licenses and insurance. Why let them pilot AI tools without proper safeguards?

The age of AI demands a new approach to data security. One that’s proactive, not reactive. Enabling, not restricting. Because in 2025, the question isn’t whether your employees will use AI—it’s whether they’ll use it safely.