Computer Vision in Manufacturing: AI-Driven Automation, Quality Control, and Efficiency Breakthroughs

Why This Matters Right Now

Manufacturing floors are in the middle of something genuinely transformative. You’ve got labor shortages getting worse, customers who won’t tolerate defects anymore, and pressure from competitors who are already moving faster. Into this chaos walks computer vision—essentially, teaching machines to see and understand what they’re looking at, then act on it.

It sounds sci-fi until you realize it’s already happening. Factories are catching defects a human inspector would miss. Robots are working in messy, unpredictable environments. Quality departments are getting real-time data instead of reports that come too late to matter. By the time we hit 2026, this won’t be a nice-to-have—it’ll be table stakes.

What strikes me most about this shift is how it’s not about replacing people. It’s about freeing them from mind-numbing work so they can actually think.

What Computer Vision Actually Does

Let’s be clear about what we’re talking about here. Computer vision in manufacturing is AI that looks at images or video streams and interprets what’s happening in them. A camera feeds data in, algorithms process it, and you get insights or automatic actions out. That’s the basic loop.

In a manufacturing context, here’s what that looks like in practice:

The system captures visual data through whatever cameras or sensors make sense for your setup. Then it processes those frames using machine learning models that have learned what normal looks like, what defective looks like, and everything in between. From there, it either tells someone what it found, or it triggers something to happen automatically. A robot repositions. A conveyor stops. An alert goes to the quality team.
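To make that loop concrete, here’s a minimal sketch. Treat it as an illustration only: `Frame`, `classify`, and `decide_action` are hypothetical names, the action strings are made up, and the “model” is just a threshold on an invented defect score standing in for a trained network.

```python
from dataclasses import dataclass
from typing import Iterable, List, Tuple


@dataclass
class Frame:
    unit_id: str
    defect_score: float  # hypothetical model output in [0, 1]


def classify(frame: Frame, threshold: float = 0.5) -> str:
    """Stand-in for a trained model: turn a score into a label."""
    return "defective" if frame.defect_score >= threshold else "ok"


def decide_action(label: str) -> str:
    """Map a label to a line action (action names are made up)."""
    return "stop_conveyor_and_alert" if label == "defective" else "pass"


def run_loop(frames: Iterable[Frame]) -> List[Tuple[str, str, str]]:
    """Capture -> process -> act, one frame at a time."""
    results = []
    for frame in frames:
        label = classify(frame)
        results.append((frame.unit_id, label, decide_action(label)))
    return results


# Three simulated frames; only the second scores above the threshold.
frames = [Frame("U1", 0.02), Frame("U2", 0.91), Frame("U3", 0.10)]
actions = run_loop(frames)
for row in actions:
    print(row)
```

In a real deployment the frames would come from a camera and the action would go to a PLC or robot controller, but the shape of the loop stays the same.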

The reason this matters in manufacturing specifically is that your operations are inherently visual. Things are moving. Things are getting assembled. Welds are happening. Paint is going on. Parts are sitting in inventory. All of that creates mountains of visual data, and most of it never gets properly analyzed because humans can’t watch everything at once. Computer vision turns that pile of information into something actually useful.

The Major Applications (And Why They Matter)

Quality Control: Where Computer Vision Has Its Biggest Impact

If there’s one area where this technology shines, it’s catching defects before they reach customers. Honestly, it’s not even close.

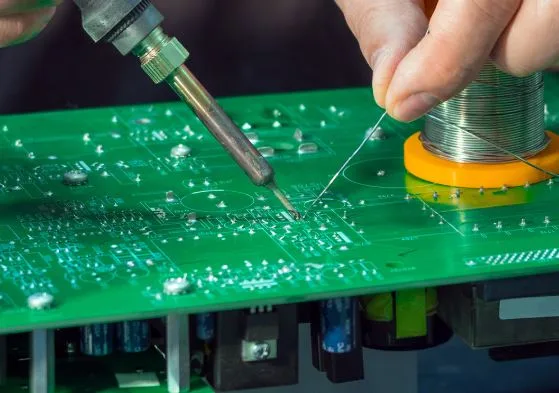

Here’s what it can spot: surface flaws, cracks, dents, missing components, paint inconsistencies, solder joints that didn’t flow right, misaligned parts. The specifics depend on your industry, but the pattern is the same. A human inspector gets tired, squints, misses something. A computer vision system doesn’t get tired. It doesn’t have bad days. It just keeps looking at every single unit and making the same call, every time.

Think about electronics manufacturing. Those solder joints are tiny and the stakes are high—a bad joint means a product fails in the field, and that’s expensive. A camera with the right resolution can look at a board in milliseconds and flag problems that’d take a human technician minutes to spot, if they spotted them at all.

In automotive, the stakes are different but just as real. A dent in a door panel, paint that wasn’t applied evenly, a weld that didn’t fully fuse. Miss these on the line and you’re either building recalls or shipping quality problems to dealers. Computer vision systems run at real-time speeds and catch this stuff before it becomes a customer problem.

The comparison is pretty stark, actually:

A human inspector gets fatigued. A system doesn’t. Human accuracy varies with who’s looking and how they’re feeling. A trained model applies the same criteria to every unit. A person can thoroughly check maybe a handful of units per hour. A camera can process hundreds or thousands in the same time. And micro-defects? Some of them are invisible to the naked eye, but a camera with the right optics and resolution can pick them up.

Robotics That Can Actually Think

Robots used to require everything to be perfectly positioned. The part goes in a bin, you program the exact coordinates, and the robot grabs from those coordinates. Helpful, but rigid and fragile. One part out of position and the whole thing fails.

Add vision to that equation and suddenly you’ve got something different. A robot can now see where a part actually is, not where you told it to be. It can pick random components out of a chaotic bin because it’s analyzing the scene in real time. It can adapt its grip based on what it sees. It can even adjust its welding pattern or painting stroke based on the actual contours of what’s in front of it.

This unlocks automation in places that were previously off-limits. That bin-picking problem I mentioned? Impossible without vision. Truly. With it? Standard practice now.
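Here’s a rough sketch of the decision a vision-guided picker makes once it has seen the bin. The `Detection` fields and the rule itself (take the topmost part the detector is confident about) are assumptions for illustration. Real systems also weigh grasp feasibility and collisions.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Detection:
    part_id: str
    x_mm: float
    y_mm: float
    z_mm: float        # height above the bin floor, e.g. from a 3D camera
    confidence: float  # detector confidence in [0, 1]


def choose_pick(detections: List[Detection],
                min_conf: float = 0.8) -> Optional[Detection]:
    """Pick the highest-lying part among confident detections.

    The topmost part is least likely to be buried under others,
    which is the (assumed) heuristic used here.
    """
    candidates = [d for d in detections if d.confidence >= min_conf]
    if not candidates:
        return None  # nothing trustworthy in view; re-image the bin
    return max(candidates, key=lambda d: d.z_mm)


scene = [
    Detection("A", 120.0, 80.0, 35.0, 0.93),
    Detection("B", 60.0, 140.0, 52.0, 0.88),
    Detection("C", 200.0, 30.0, 70.0, 0.41),  # highest, but low confidence
]
target = choose_pick(scene)
print(target)
```

The point is that the coordinates come from what the camera sees on this cycle, not from a fixed program.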

Spotting Machine Trouble Before It Happens

Predictive maintenance has been the dream for years—catch the problem before it breaks and you’ve won. Computer vision makes this actually doable.

Stick an infrared camera on your equipment and it tells you where heat is building up. Mount regular cameras and they catch abnormal vibrations, misalignments, leakage. The system learns what baseline looks like and flags deviations. A bearing that’s starting to fail doesn’t go from fine to catastrophic overnight. It shows signs. Vision systems catch those signs and your maintenance team can actually be proactive for once, rather than scrambling after something explodes.
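The “learn the baseline, flag deviations” idea can be sketched with nothing more than a mean and a standard deviation. The temperature readings below are invented, and the three-sigma rule is one common choice, not a recommendation for any particular machine.

```python
from statistics import mean, stdev
from typing import List, Tuple


def fit_baseline(readings: List[float]) -> Tuple[float, float]:
    """Summarise healthy-state readings as (mean, standard deviation)."""
    return mean(readings), stdev(readings)


def flag_deviations(readings: List[float],
                    baseline: Tuple[float, float],
                    k: float = 3.0) -> List[int]:
    """Return indices of readings more than k sigma away from baseline."""
    mu, sigma = baseline
    return [i for i, r in enumerate(readings) if abs(r - mu) > k * sigma]


# Hypothetical bearing temperatures (deg C) taken from thermal images.
healthy = [61.8, 62.1, 61.9, 62.4, 62.0, 61.7, 62.2, 62.0]
baseline = fit_baseline(healthy)

# Later readings drift upward as the bearing starts to degrade.
recent = [62.1, 62.3, 62.6, 63.1, 63.9]
flagged = flag_deviations(recent, baseline)
print(flagged)
```

Only the last two readings cross the threshold, which is the early warning a maintenance team would want to see.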

Other Applications Worth Mentioning

Safety monitoring has gotten smarter—cameras can actually detect when someone walks into a restricted area without proper PPE and trigger alerts. That’s real-time protection, not a safety video that nobody pays attention to.
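As a sketch of that safety check: assume an upstream detector already gives each person a floor position and a PPE flag (both hypothetical fields here). Then the alert logic is a point-in-polygon test against the restricted zone.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


@dataclass
class Person:
    person_id: str
    position: Point  # floor coordinates in metres (assumed calibration)
    has_ppe: bool    # assumed output of a PPE detector


def point_in_polygon(x: float, y: float, polygon: Sequence[Point]) -> bool:
    """Ray-casting test: count edge crossings to the right of the point."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge straddles the horizontal ray
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zone_alerts(people: List[Person], zone: Sequence[Point]) -> List[str]:
    """IDs of people inside the zone without PPE."""
    return [p.person_id for p in people
            if not p.has_ppe and point_in_polygon(*p.position, zone)]


restricted = [(0.0, 0.0), (4.0, 0.0), (4.0, 3.0), (0.0, 3.0)]
people = [
    Person("p1", (2.0, 1.0), has_ppe=False),  # inside, no PPE
    Person("p2", (5.0, 1.0), has_ppe=False),  # outside the zone
    Person("p3", (1.0, 2.0), has_ppe=True),   # inside, properly equipped
]
alerts = zone_alerts(people, restricted)
print(alerts)
```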

Inventory and material flow tracking is less glamorous but genuinely useful. Cameras watching conveyors and warehouses feed data into your planning systems so you’re not flying blind about what’s actually in motion.

And production analytics—being able to measure cycle times, spot bottlenecks, identify where delays are actually happening, all without anyone manually recording anything. That’s intelligence you can actually act on.
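To show what that looks like in practice, here’s a small sketch that turns camera-logged timestamps into cycle times and points at the slowest station. The station names and timestamps are made up, and “bottleneck” here simply means the station with the longest average cycle.

```python
from statistics import mean
from typing import Dict, List, Tuple


def cycle_times(timestamps: List[float]) -> List[float]:
    """Gaps between consecutive units passing a station (seconds)."""
    return [b - a for a, b in zip(timestamps, timestamps[1:])]


def find_bottleneck(
    station_events: Dict[str, List[float]]
) -> Tuple[str, Dict[str, float]]:
    """Return the slowest station and the average cycle time per station."""
    averages = {name: mean(cycle_times(ts))
                for name, ts in station_events.items()}
    slowest = max(averages, key=averages.get)
    return slowest, averages


# Seconds at which a camera saw each unit leave the station (invented data).
events = {
    "press": [0, 10, 20, 30],
    "weld": [5, 19, 33, 47],
    "paint": [12, 24, 36, 48],
}
bottleneck, averages = find_bottleneck(events)
print(bottleneck, averages)
```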

How This All Works: The Technical Reality

The system has basically three layers working together.

First, you need to capture the right image. That means choosing cameras carefully—resolution matters, but so does frame rate and sensor type. 3D cameras for depth, infrared for thermal issues, line-scan for really fast-moving stuff. Get this wrong and even brilliant algorithms downstream will struggle.
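Two quick bits of arithmetic help when sizing a camera. The sketch below assumes a rule of thumb of about three pixels across the smallest defect, which is a starting assumption rather than a spec. It also estimates motion blur as line speed times exposure time. All the numbers are illustrative.

```python
import math


def min_pixels_across(fov_mm: float,
                      smallest_defect_mm: float,
                      pixels_per_defect: int = 3) -> int:
    """Sensor pixels needed along one axis to resolve the smallest defect."""
    return math.ceil(fov_mm / smallest_defect_mm * pixels_per_defect)


def motion_blur_mm(line_speed_mm_s: float, exposure_s: float) -> float:
    """Distance the part travels while the shutter is open."""
    return line_speed_mm_s * exposure_s


# Hypothetical setup: 300 mm field of view, 0.5 mm defects,
# conveyor at 500 mm/s, 1 ms exposure.
pixels = min_pixels_across(fov_mm=300, smallest_defect_mm=0.5)
blur = motion_blur_mm(line_speed_mm_s=500, exposure_s=0.001)

print(f"pixels needed across the field of view: {pixels}")
print(f"motion blur: {blur:.2f} mm")
```

If the blur comes out as large as the defect you’re hunting, as it does with these numbers, you need a shorter exposure, strobed lighting, or a line-scan camera.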

Second is the AI layer. Convolutional neural networks do most of the heavy lifting here. Object detection models like YOLO or Faster R-CNN are standard. For more complex problems, you might use semantic segmentation or even vision transformers. Anomaly detection algorithms are their own category—teaching systems to flag things that don’t match the normal pattern, even if you’ve never seen that specific defect before.
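The anomaly detection idea is easier to see in code. This is a minimal sketch of one simple approach, nearest-neighbour distance to known-good samples. It assumes each image has already been reduced to a small feature vector (in practice something like a CNN embedding). The vectors and the 1.5 margin are invented for illustration.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_distance(vec: Vector, reference: List[Vector]) -> float:
    """Euclidean distance from vec to its closest reference sample."""
    return min(math.dist(vec, r) for r in reference)


def fit_threshold(normal: List[Vector], margin: float = 1.5) -> float:
    """Largest leave-one-out neighbour distance among normals, times a margin."""
    loo = [nearest_distance(v, normal[:i] + normal[i + 1:])
           for i, v in enumerate(normal)]
    return max(loo) * margin


def is_anomaly(vec: Vector, normal: List[Vector], threshold: float) -> bool:
    """Flag anything that sits too far from every known-good sample."""
    return nearest_distance(vec, normal) > threshold


# Feature vectors from known-good parts only; no defect examples needed.
normal_parts = [(1.0, 1.0), (1.1, 0.9), (0.9, 1.1), (1.0, 1.2), (1.2, 1.0)]
threshold = fit_threshold(normal_parts)

looks_normal = is_anomaly((1.05, 1.0), normal_parts, threshold)
looks_odd = is_anomaly((2.0, 0.2), normal_parts, threshold)
print(looks_normal, looks_odd)
```

Notice the model never saw a defective part. It only knows what normal looks like, which is why this family of methods can flag defects nobody anticipated.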

Finally, you have to decide where the processing actually happens. Edge deployment, meaning running AI directly on the camera or a local box, gives you the lowest latency but requires capable hardware on the floor. Cloud-based systems give you far more computing power and easier updates, but you’ve got network latency and security considerations. Many manufacturing QC applications end up on the edge because the line can’t wait for a round trip.
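One way to reason about the edge versus cloud choice is a simple latency budget. In the sketch below, the line rate and every per-stage timing are hypothetical placeholders. Measure your own before deciding anything.

```python
from typing import Dict, Tuple


def per_unit_budget_ms(units_per_minute: float) -> float:
    """Time available per unit before the next one arrives."""
    return 60_000 / units_per_minute


def fits_budget(stage_ms: Dict[str, float],
                budget_ms: float) -> Tuple[bool, float]:
    """Sum the pipeline stages and compare against the budget."""
    total = sum(stage_ms.values())
    return total <= budget_ms, total


budget = per_unit_budget_ms(600)  # 600 units per minute

# Illustrative timings only (milliseconds).
edge = {"capture": 10, "inference": 25, "actuation": 20}
cloud = {"capture": 10, "upload": 60, "inference": 15,
         "download": 40, "actuation": 20}

edge_ok, edge_total = fits_budget(edge, budget)
cloud_ok, cloud_total = fits_budget(cloud, budget)

print(f"budget: {budget:.0f} ms")
print(f"edge:  {edge_total} ms, fits: {edge_ok}")
print(f"cloud: {cloud_total} ms, fits: {cloud_ok}")
```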

Practically Speaking: How to Actually Make This Work

Start simple. Pick something that’s clearly a problem right now. Maybe surface defects are costing you, or you’re spending money on inspection labor that never scales. Focus there first. Quick wins build momentum and funding appetite for bigger projects.

Data is everything—and I mean that. Collect lots of images. Label them properly. If you’re cutting corners here, your whole system suffers. And you need variety. Normal parts, slightly defective parts, catastrophically defective parts, edge cases that are weird and unusual. That variety teaches the system to be robust.
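A quick audit of your labels will tell you whether you really have that variety. Here’s a minimal sketch. The class names, the counts, and the 5% floor are all assumptions you’d replace with your own.

```python
from collections import Counter
from typing import Dict, Iterable, List


def class_shares(labels: List[str],
                 expected_classes: Iterable[str]) -> Dict[str, float]:
    """Fraction of the dataset belonging to each expected class."""
    if not labels:
        raise ValueError("no labels to audit")
    counts = Counter(labels)  # missing classes count as 0
    total = len(labels)
    return {c: counts[c] / total for c in expected_classes}


def underrepresented(labels: List[str],
                     expected_classes: Iterable[str],
                     min_share: float = 0.05) -> List[str]:
    """Classes whose share of the dataset falls below min_share."""
    shares = class_shares(labels, expected_classes)
    return sorted(c for c, share in shares.items() if share < min_share)


# Invented label counts for a 1,000-image dataset.
labels = ["ok"] * 900 + ["scratch"] * 70 + ["dent"] * 25 + ["crack"] * 5
expected = ["ok", "scratch", "dent", "crack", "missing_part"]

thin = underrepresented(labels, expected)
print(thin)
```

Anything on that list is a class the model will probably handle badly until you collect more examples of it.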

Hardware setup matters more than people expect. Inconsistent lighting destroys vision systems. Poor camera placement means you’re not actually seeing what you need to see. Bad frame rate and you miss fast-moving defects. This isn’t glamorous engineering work, but it’s where so many deployments actually fail. You have to get it right.

Connect it to your systems. A vision system that just flags defects in isolation isn’t that valuable. If it hooks into your SCADA, your MES, your robots, your quality database—then you’ve got an actual system where things can happen automatically and systematically. That’s where the real ROI kicks in.

And once you’ve got it running, actually monitor how it’s performing. False positives creep in. Models drift. You hit edge cases you didn’t train for. Run it like you’d run any piece of critical infrastructure.
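Monitoring can start small. The sketch below tracks one number over a rolling window: the share of flagged units that a human reviewer found to be fine. The class name, the window size, and the 20% limit are hypothetical choices, not a standard.

```python
from collections import deque


class FalseAlarmMonitor:
    """Rolling share of flagged units that turned out not to be defective."""

    def __init__(self, window: int = 100, max_share: float = 0.05) -> None:
        self.outcomes = deque(maxlen=window)  # True means a false alarm
        self.max_share = max_share

    def record(self, flagged: bool, truly_defective: bool) -> None:
        """Log the reviewed outcome of a unit; unflagged units are ignored."""
        if flagged:
            self.outcomes.append(not truly_defective)

    def share(self) -> float:
        if not self.outcomes:
            return 0.0
        return sum(self.outcomes) / len(self.outcomes)

    def needs_review(self) -> bool:
        """Only raise the flag once the window is full."""
        full = len(self.outcomes) == self.outcomes.maxlen
        return full and self.share() > self.max_share


monitor = FalseAlarmMonitor(window=10, max_share=0.2)

# Ten reviewed flags, one of which was a false alarm.
monitor.record(flagged=True, truly_defective=False)
for _ in range(9):
    monitor.record(flagged=True, truly_defective=True)
early_share = monitor.share()
early_review = monitor.needs_review()

# Three more false alarms push the rolling share over the limit.
for _ in range(3):
    monitor.record(flagged=True, truly_defective=False)
late_share = monitor.share()
late_review = monitor.needs_review()

print(early_share, early_review, late_share, late_review)
```

A rising false alarm share is often the first visible sign of drift: lighting changed, a new supplier’s parts look slightly different, or the lens needs cleaning.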

What Leaders Are Actually Saying About This

The quality folks I know have basically one message: computer vision changes how you think about defect prevention. It’s not just about catching problems—it’s about seeing patterns you couldn’t see before, understanding root causes better, actually protecting your brand instead of just firefighting quality issues as they show up.

On the robotics side, engineers are excited because suddenly you can automate things you couldn’t before. Unstructured environments, variation, complexity—none of that shuts you down anymore.

And honestly, plant managers are surprisingly positive about the workforce impact. This technology doesn’t eliminate jobs. It relocates them. You’re not doing mindless inspection anymore. You’re troubleshooting why something was flagged, managing the system, making higher-judgment calls. People end up with better jobs, not without jobs.

The Honest Comparison: Before and After

What traditional manufacturing does, versus what’s possible now—the gap is significant.

Quality control used to be manual and intermittent. Now it’s real-time and consistent. Safety used to be mostly reactive. Now you can actually prevent incidents. Robots used to be inflexible and couldn’t handle variation. Now they adapt. Maintenance was always scheduled based on time, not actual condition. Now you can base it on reality. And analytics used to show up weeks later in reports. Now you’re making decisions based on what’s happening right now.

Real Examples, Real Industries

Automotive plants are using this to catch weld defects and paint issues on the line. Electronics manufacturers are spotting defective solder joints and component misalignments at the micro scale. Pharmaceutical operations verify labels, check blister pack integrity, and confirm tablet shapes. Food and beverage companies catch foreign objects and packaging defects. Heavy manufacturing monitors thermal signatures and vibration patterns that suggest equipment is degrading.

These aren’t theoretical applications. These are things that are working at scale right now.

Where This Is Going

Generative AI is starting to play a role—you can use it to create synthetic training data or simulate defects that you can’t easily capture in photos. That’s useful when you need to train systems to handle rare but critical problems.

The factories of the future probably won’t have just vision. They’ll combine visual data with sensor readings, acoustic analysis, text logs from maintenance records. All of that together creates a much more complete picture. The system understands not just what something looks like, but how it sounds, what the environment is, what happened historically.

And eventually, you get to fully autonomous production lines. Not autonomous in the robo-Armageddon sense, but in the sense that the line can adjust its own parameters, trigger its own maintenance, correct its own quality issues, and predict supply interruptions before they happen. That’s the trajectory this is on.

The Bottom Line

Computer vision is becoming one of those foundational technologies that separates efficient manufacturers from the rest. The accuracy is real—99%+ when it’s set up properly. The speed is real. The cost is becoming realistic. The applications keep expanding. At Azilen Technologies, we see this shift accelerating every day.

If you’re in manufacturing and you’re not thinking about this seriously, you’re already falling behind. The technology is accessible now. The ROI is there. And honestly, waiting longer just means you’re delaying competitive advantage you could be building today.

Questions People Actually Ask

What’s the biggest win here? Real-time defect detection that actually prevents problems, not just catches them after the fact. That changes everything about quality economics.

Will this eliminate my workforce? No. It removes tedious inspection work. Real quality expertise—understanding why something happened, what to do about it, how to prevent it next time—that’s still human.

Is this budget-breaking? Not anymore. Modern hardware and edge AI have made this reasonably affordable. You’re usually looking at payback through reduced scrap and rework.

Which industries see the biggest benefit? Automotive, electronics, pharma, food and beverage, aerospace, and heavy equipment. Basically, anywhere defects are costly or precision matters.

How accurate is this really? With decent training data and proper setup, you’re looking at better than 99%. That’s better than humans manage consistently.

How long until you’re up and running? Simple stuff—a few weeks. Complex robotic systems—several months. Depends on the scope.
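One footnote on the accuracy question above: when defects are rare, a headline accuracy figure can hide a lot. The counts below are invented to make the point. It’s worth asking a vendor for precision and recall as well.

```python
from typing import Tuple


def metrics(tp: int, fp: int, fn: int, tn: int) -> Tuple[float, float, float]:
    """Return (accuracy, precision, recall) from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return accuracy, precision, recall


# Hypothetical run: 10,000 units, 100 of them truly defective.
# The system catches 60 defects, misses 40, and raises 20 false alarms.
accuracy, precision, recall = metrics(tp=60, fp=20, fn=40, tn=9880)

print(f"accuracy:  {accuracy:.1%}")
print(f"precision: {precision:.1%}")
print(f"recall:    {recall:.1%}")
```

In this made-up run the system is over 99% accurate and still misses 40 of the 100 defects, so recall on the defect class is the number to watch.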